The following italicized paragraphs of this story were written with generative artificial intelligence (Gen-AI). We did this to show how easy it is to use Gen-AI to create something. In this case, we gave the text-generation program ChatGPT two prompts, or commands.

We told the program to define artificial intelligence and generative artificial intelligence, and to explain how it's being used for good and for bad. Here is how the program responded:

*Artificial Intelligence (AI) has rapidly evolved and expanded its influence in our society, and its capabilities are increasingly remarkable. AI refers to the development of computer systems that can perform tasks that would typically require human intelligence.*

*Generative Artificial Intelligence (AI) refers to a subset of AI technologies and algorithms that have the ability to generate or create new content, such as images, text, videos, and even music.*

*One significant use of generative AI is in creative fields such as art and design. Artists and designers can leverage generative AI tools to produce unique and visually stunning pieces of artwork or generate novel ideas for creative projects. In the entertainment industry, generative AI is being utilized to enhance special effects, create realistic computer-generated characters, and generate virtual environments.*

*However, there are potential concerns and drawbacks associated with generative AI. Misuse of the technology could lead to the creation of deepfake videos or deceptive content, posing risks to the integrity of information and potentially enabling malicious activities. Privacy concerns may arise when generative AI is used to create realistic synthetic identities or mimic individuals without their consent.*

Now that we know what AI is and what it can do, according to the AI itself, here's what we can VERIFY about generative AI: AI that is used to generate or create new content and that, in some cases, is being used to mislead or misinform people. Here are some tips from the VERIFY team to help you spot it.

THE SOURCES

- IBM

- An article from Harvard University

- Brookings Institution, a nonprofit public policy organization

- Siwei Lyu, Ph.D., director of the UB Media Forensic Lab at SUNY Buffalo

- Gold Penguin, a marketing company

- Dessa, an AI lab run by Cash App

- AI Voice Detector

- Undetectable AI Checker

- Originality.ai

- Copyleaks

WHAT WE FOUND

Artificial intelligence is not new. In the 1950s, British mathematician Alan Turing wondered whether machines could think like humans, so he developed the "Turing test." The test pitted a human being against a machine: a person was tasked with telling the difference between a human's text responses and a computer's.

Since Turing first explored the possibility of thinking machines, AI technologies have advanced, and most people now use them every day. Much of that is traditional AI, which requires rules to be plugged into a program so it can carry out a specific task. Search engines like Google use traditional AI to return results.

Generative AI (Gen-AI) is a bit different. It requires someone to prompt, or command, the program to create something completely new. Here are some examples of how Gen-AI is used:

- Video game assets, like characters and environments

- Music generation, like "Heart on My Sleeve," a song that used AI-generated vocals imitating Drake and The Weeknd

- Advertising assets or scenes: the GOP recently released an attack ad against President Joe Biden made with Gen-AI

- Chatbots, like the ones you chat with on a bank's website to get answers to specific questions

Siwei Lyu, the director of the UB Media Forensic Lab at SUNY Buffalo and a deepfake expert, told VERIFY that while Gen-AI can be helpful, it can also do harm in the wrong hands. It's being used to "recreate or produce realistic types of media," including images, video, audio and some text, Lyu said. This includes impersonations and fake content.

Lyu said he has several concerns about Gen-AI:

- Scams in which people use AI to impersonate someone else, for example to ask for money

- Falsified news stories written using AI

- Deepfake impersonation videos and fake photos made with generative AI

"You can use AI models to recreate human faces, making them look like real people, but [they are actually] nobody alive," Lyu said. "You can also use an algorithm to transfer or convert voices using text to voice … You can have videos where the subject is generated by, again, generative AI models to impersonate a particular person."

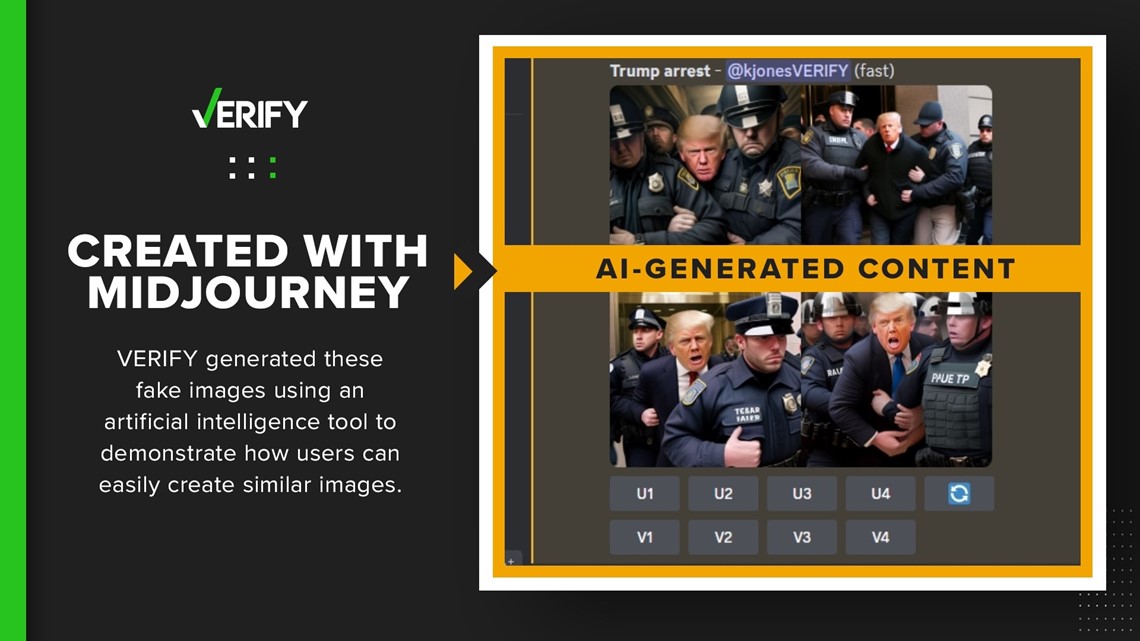

With the tools currently available, people can create content with just a prompt, or a written direction or command, as VERIFY did to create the introduction to this story. For example, people can use Midjourney, a Gen-AI tool, to generate images just by describing the desired content.

The image above is an example VERIFY created using Midjourney. We made it while fact-checking Gen-AI images that were made to show what former President Donald Trump would look like if he were arrested.

Gen-AI is also harder to fact-check than other types of manipulated content, Lyu told VERIFY. When VERIFY fact-checks images or video, we use tools to determine whether the content has appeared on the internet before, whether it has been edited from an earlier version, or whether it is being shared out of context. These tools are less effective with Gen-AI because the content is new.

Lyu went over the different types of Gen-AI and also offered tips on how to spot it.

Video

There are several types of doctored videos that can spread false information. Two of the most common are deepfakes and shallowfakes. A deepfake video is made using Gen-AI, with programs that can be used to replace or synthesize faces, speech or expressions of emotions.

Shallowfakes, by contrast, are created by using simple video editing software to cut together or alter existing videos.

So how can you tell whether a video was created with Gen-AI? There are tells. First, Lyu says, if your gut tells you something is fake, go with your gut. If that doesn't settle it, here are some technical signs to look for that can indicate you're looking at Gen-AI. Lyu calls them artifacts:

- Movement - How is the subject moving in the video? Do their body language and facial expressions appear odd or too perfect?

- Reflection - Can you see any kind of reflection in a person’s eyes, like another person taking the picture or a camera? If not, you could be looking at Gen-AI.

- The hands - Gen-AI tools are notoriously bad at getting hands right, Lyu said. Are they misshapen? Are there too many or not enough fingers?

- Background - What does the background look like? Is it blurry, static or does it appear out of place?

There are also tools you can use to detect Gen-AI videos, like deepfakes. Deepware AI's scanner analyzes a video and its metadata and tells you whether a video posted to YouTube, Twitter or Facebook is likely a deepfake. VERIFY tested it with a video claiming to show Tom Cruise jumping over Keegan-Michael Key. The tool said it was a deepfake, and it was right.

Audio

Lyu said one of his biggest concerns with Gen-AI is its potential to be used to impersonate someone else. That's a particular risk with telephone calls, because you can't see the person on the other end of the line. Someone could call you and ask for money using a voice created with Gen-AI.

When it comes to detecting Gen-AI audio, Lyu says to listen for breathing. A real person takes breaths between words when they talk and makes other small noises. He also said to listen to the background. Is it too quiet? Is it too loud?

If you have a recording of possible Gen-AI audio, AI Voice Detector is a tool that can help. You upload the recording, and the tool analyzes it and estimates how likely it is that the audio was created using Gen-AI technologies.

Here are some tips, previously reported by VERIFY, on how to avoid falling victim to an audio scam:

- First and foremost, be vigilant and skeptical if you receive an unexpected or unusual phone call from anybody, including family members.

- Agree on a secret code word or phrase that your family members or business associates will use when they are in trouble. You can then ask someone for the code word before providing any information.

- Hang up and call back the person or company who supposedly contacted you, using a phone number that you know is theirs.

- Ask questions that only the real person would know the answers to, such as anecdotes or information about your last conversation. You can also ask about private information that could not be gathered from a social media account.

Text

Gold Penguin, a marketing company, says AI-generated text is the hardest type of Gen-AI to detect, but there are tools that can help. They aren't 100% reliable, though, and some of them charge a fee. Here are some examples of free tools:

- Undetectable AI Checker: To use this, paste the text into the box and submit it for testing. When VERIFY pasted in the introduction of this article, the checker told us "your content was detected as written by AI." It was.

- Copyleaks: Another free tool that works the same way. It also told us the introduction of this story was created with AI.

- OpenAI's AI text classifier: This tool works the same way as the others, but requires a sample of at least 1,000 characters.

- Grammarly's plagiarism checker: A tool teachers can use to determine whether school assignments have been plagiarized or written with AI.

If you have questions about AI, or if you think something was created with AI and want us to check it out, email us at questions@verifythis.com or tag us on social media @verifythis.